Big Data at CERN

"As much data as Facebook"

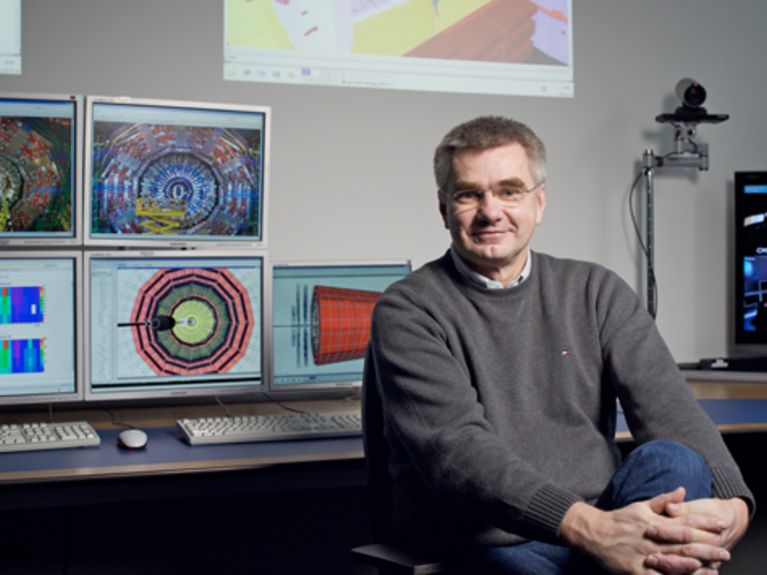

Prof. Joachim Mnich is a member of the international team of physicists who discovered the Higgs boson. Photo: DESY

Big data sounds like a contemporary phenomenon. Yet scientists at CERN have been grappling with huge amounts of data for 20 years. As part of our series on big data, we spoke with particle physicist Joachim Mnich of Deutsches Elektronen-Synchrotron (DESY) about petabytes, discarded data and grid computing.

Experiments at large-scale research facilities, such as the world's largest particle accelerator, the Large Hadron Collider (LHC) at CERN, the European Organization for Nuclear Research in Geneva, generate enormous amounts of data, which must be collected, analysed and, where necessary, stored for long periods. Managing such "big data" takes considerable organisational effort and special storage technology. When the so-called "God particle" was detected at CERN in July 2012, providing the long-sought confirmation that the Higgs boson exists, several hundred petabytes of data had been analysed in the search. That is a figure most people can hardly grasp: one petabyte is 10^15 bytes, a 1 followed by 15 zeros.

Prof. Joachim Mnich is a member of the international team of physicists who discovered the Higgs boson. He is Director in charge of Particle and Astroparticle Physics at Deutsches Elektronen-Synchrotron (DESY), a research centre of the Helmholtz Association. We spoke with him about handling vast amounts of data at CERN.

What data volumes do you have to cope with?

The LHC experiments produce about 20 petabytes of data per year; that is 20 million gigabytes. In scale, this is comparable to the worldwide data volume of Facebook or YouTube. The data generated by the experiments passes through several processing and analysis stages, and the output of each stage has to be stored as well. The data is then compared with simulations so that we can understand it in full detail.
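The conversion behind these figures is simple decimal arithmetic. The short Python sketch that follows assumes SI (decimal) prefixes, so 1 GB = 10^9 bytes and 1 PB = 10^15 bytes; some operating systems and tools report binary units instead, which would shift the numbers slightly. The function name `petabytes_to_gigabytes` is purely illustrative.

```python
# Assumption: decimal (SI) prefixes, not binary (GiB/PiB) units.
GIGABYTE = 10**9    # bytes
PETABYTE = 10**15   # bytes: a 1 followed by 15 zeros


def petabytes_to_gigabytes(pb: int) -> int:
    """Convert a whole number of petabytes to gigabytes (1 PB = 10**6 GB)."""
    return pb * (PETABYTE // GIGABYTE)


annual_pb = 20  # approximate yearly LHC output quoted in the interview
print(petabytes_to_gigabytes(annual_pb))  # 20000000
```

Integer arithmetic is used here so the result is exact rather than a floating-point approximation.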

Do you really evaluate all of it? Do you go through 20 petabytes every year?

Yes, that is the data we need for analysis, and it is already a selection. We actually produce far, far more data. Immediately after each collision, we have to decide whether to record its data and include it in these 20 petabytes. In fact, we keep only one in three million collisions.

How do you know you have chosen the right collision?

That is the art of conducting LHC experiments. Specially designed selection procedures, so-called triggers, help us choose. First, very simple criteria are applied at the hardware level by fast electronics. Next, large data centres connected directly to the experiments take over: special software examines every collision that passed the first stage and decides whether it qualifies for further evaluation.

And the data from the other collisions?

It is lost forever. Of course, we run cross-checks: every now and then we keep the data from a collision that the software actually rejected. This way we make sure that we do not miss anything interesting.
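To make the two-stage selection and the cross-check concrete, here is a toy Python sketch. Everything in it is hypothetical: the `Collision` fields, the energy threshold, the muon-count criterion and the 1-in-1000 cross-check rate are invented for illustration and bear no relation to the real LHC trigger menus, which are far more elaborate and keep only about one collision in three million.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Collision:
    # Hypothetical, heavily simplified event summary.
    total_energy_gev: float  # cheap quantity, suitable for a hardware-level cut
    n_muons: int             # stands in for a result of software reconstruction


# Illustrative values only, not real LHC trigger settings.
HARDWARE_ENERGY_CUT_GEV = 100.0
CROSS_CHECK_EVERY = 1000  # keep 1 in N software-rejected events


def hardware_stage(c: Collision) -> bool:
    """Stage 1: a very simple criterion, as fast electronics would apply."""
    return c.total_energy_gev >= HARDWARE_ENERGY_CUT_GEV


def software_stage(c: Collision) -> bool:
    """Stage 2: software examines each event that passed stage 1."""
    return c.n_muons >= 2


def run_trigger(
    collisions: Iterable[Collision],
) -> Tuple[List[Collision], List[Collision]]:
    """Return (recorded, cross_check) events; everything else is discarded."""
    recorded: List[Collision] = []
    cross_check: List[Collision] = []
    software_rejects = 0
    for c in collisions:
        if not hardware_stage(c):
            continue  # rejected in hardware: lost forever
        if software_stage(c):
            recorded.append(c)
        else:
            software_rejects += 1
            # Occasionally keep an event the software rejected, to check
            # that the selection is not missing anything interesting.
            if software_rejects % CROSS_CHECK_EVERY == 0:
                cross_check.append(c)
    return recorded, cross_check


events = [Collision(50.0, 3), Collision(150.0, 2), Collision(150.0, 0)]
recorded, cross_check = run_trigger(events)
print(len(recorded), len(cross_check))  # 1 0
```

Note that the first event is dropped by the hardware stage even though it would have passed the software criterion: once a collision is rejected early, no later stage can recover it.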

That is like the needle in the haystack!

Finding a needle would be easy compared with verifying the Higgs boson.

Joachim Mnich is Director in charge of Particle and Astroparticle Physics at Deutsches Elektronen-Synchrotron (DESY) in Hamburg, where he had already worked as a doctoral student at the PETRA storage ring. He later moved to CERN, and in 2005 he took up a position as a senior scientist at DESY, where his responsibilities included heading the CMS group. He has been a member of the DESY Board of Directors since January 2008. Photo: DESY

Where is the data kept? Where is it analysed?

These amounts of data are far too large for a single data centre. We already knew this 20 years ago and started looking for a solution. That work evolved into grid computing: distributing the data across computing centres around the world. In Germany, the backbone is formed by the Helmholtz Association's data centres at the Karlsruhe Institute of Technology, at Deutsches Elektronen-Synchrotron (DESY) and at the GSI Helmholtz Centre for Heavy Ion Research. When scientists want to evaluate data, they send their analysis program to the grid, which then runs it wherever computers happen to have spare capacity.
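The idea of sending work wherever there is spare capacity can be illustrated with a toy greedy scheduler. This is a sketch under stated assumptions, not how the grid middleware actually works: the site names and free-slot counts are hypothetical, each job is assumed to need exactly one slot, and ties are broken alphabetically by site name. Real grid systems also weigh other factors, such as where the input data is stored.

```python
import heapq
from typing import Dict, List


def dispatch(jobs: List[str], free_slots: Dict[str, int]) -> Dict[str, List[str]]:
    """Assign each job to the site with the most free slots at that moment.

    Jobs that find no free slot anywhere stay under the key "queued".
    Assumption: every job occupies exactly one slot.
    """
    # heapq is a min-heap, so store negated slot counts to pop the largest.
    heap = [(-slots, site) for site, slots in free_slots.items() if slots > 0]
    heapq.heapify(heap)
    assignment: Dict[str, List[str]] = {site: [] for site in free_slots}
    assignment["queued"] = []
    for job in jobs:
        if not heap:
            assignment["queued"].append(job)  # the whole grid is busy
            continue
        neg_slots, site = heapq.heappop(heap)
        assignment[site].append(job)
        if neg_slots + 1 < 0:  # the site still has a free slot left
            heapq.heappush(heap, (neg_slots + 1, site))
    return assignment


# Hypothetical sites and capacities, for illustration only.
free = {"site-A": 2, "site-B": 1, "site-C": 0}
print(dispatch(["j1", "j2", "j3", "j4"], free))
# {'site-A': ['j1', 'j2'], 'site-B': ['j3'], 'site-C': [], 'queued': ['j4']}
```

A priority queue makes each assignment cost O(log n) in the number of sites; with only three sites a plain loop would serve equally well, but the heap keeps the idea intact as the grid grows.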

Do you store data in reserve because you cannot yet evaluate it?

In a sense, yes, though perhaps not in the way you mean. After about two years of operation, the accelerator is shut down for a break. The LHC, for instance, is not running this year. We are currently making improvements, replacing defective parts and calibrating the detectors to put the finishing touches on the analyses. For this somewhat quieter period, we stockpiled data for less urgent analyses, and that data is now being evaluated. In principle, however, storing data without evaluating it makes no sense to us. Our science is an iterative process: we need the evaluation to improve the experiment.

"Big data" means huge changes for all scientific disciplines, yet in your field things never seem to have been any different. Some people claim that "big data" will revolutionise science: perhaps in future we will no longer need models, because the data will speak to us.

If the data simply spoke to us, we would never have found the Higgs boson. We needed an idea of what we were looking for. Based on the model developed by Peter Higgs and his colleagues, and using modern computer technology, we were able to calculate how a Higgs boson would behave in the detector. Our data matched the model. If we had just taken the data as it was, we might have seen a "bump" but would not have known what it was. After 50 years, we can say that the Standard Model of particle physics is correct, up to a point. It describes all the phenomena we can measure, and those make up just four per cent of our universe. For the remaining 96 per cent, there are new models, and it remains to be seen which one is right. Models are indispensable. They are the only way to get an idea of what the needle might look like before we search the haystack.

"Big data", grid computing, simulation. Is the future of science in the hands of computer scientists and statisticians?

Computer scientists and statisticians are indeed becoming more and more important in science: the demands on statistical analysis keep increasing, and networking is becoming ever more complex. Yet even in the future, it will not be computer scientists and statisticians who determine the direction of science.

The idea of open science, involving citizens in scientific work, is a megatrend. How much sense does that make in your field? Is it reasonable to make this vast amount of data available to everyone?

Nobody could do anything with it. Even our students are given processed data so that their exercises lead to meaningful analyses. There is a reason why several thousand scientists work together on a project such as the search for the Higgs boson: data evaluation is highly complex. Of course, we regularly publish our results, making them accessible to everybody.

That means we should trust you to do the right thing.

Yes, that trust is a prerequisite for our work as scientists. In the case of the Higgs boson, the probability that we got it wrong is one in two million. That is something you can trust.
